K8s Dynamic StorageClass Provisioner with Azure Files

Use case: An application deployed with more than one replica (pod), where the replicas can share storage through a volume that uses "accessModes: ReadWriteMany".

Note: Storage can be provisioned statically or dynamically; this example demonstrates dynamic provisioning.

Azure Files can be used to mount an SMB share, backed by an Azure Storage account, into pods. Files lets you share data across multiple nodes and pods, so several pods can write to the same volume at once. An Azure Disk, by contrast, is dedicated to a single PV and is attached to one node at a time.

Azure offers two performance tiers for Files:

1. Azure Standard storage, backed by regular HDDs.

2. Azure Premium storage, backed by high-performance SSDs.
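
As a rough sketch of where this choice shows up in Kubernetes: with the in-tree `kubernetes.io/azure-file` provisioner used later in this post, the tier is selected through the `skuName` parameter of the StorageClass. The class name `file01premium` and the file name `premiumSC.yaml` are made up for illustration. Premium shares need a Premium-capable storage account and, as far as I know, a larger minimum share size (100 GiB), so verify this against your own subscription before relying on it.

```shell
# Write a StorageClass manifest that asks for SSD-backed (Premium) Azure Files.
# "file01premium" and "premiumSC.yaml" are hypothetical names for this sketch.
cat <<'EOF' > premiumSC.yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: file01premium
provisioner: kubernetes.io/azure-file
parameters:
  skuName: Premium_LRS   # Standard_LRS would select HDD-backed Standard storage
EOF

# Apply it only after reviewing the file:
# kubectl create -f premiumSC.yaml
```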

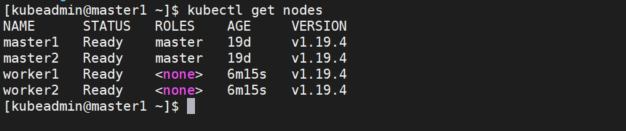

Step1: We could use one of the four default storage classes (SC) provided by Azure, but here I want to demonstrate how a custom SC can refer to an existing storage account. For that, I create my storage account first.

Default StorageClasses:

Using Azure CLI:

$ az storage account create --name dynamicpv1 \
    --resource-group mc_pvtest_pvaks_eastus \
    --access-tier Hot \
    --kind StorageV2 --sku Standard_LRS \
    --tags dynamicpv=pvaks

--name: Name of the storage account.

--resource-group: Name of the resource group you intend to use. Note: by default, dynamically provisioned storage accounts are created in the AKS node resource group; you can point to a different resource group by mapping its resource ID. I will use the default node resource group.

--access-tier: Hot or Cool, chosen by how often the data is accessed (Archive is set per blob, not on the account). If none is given, a GPv2 account defaults to Hot. More detail is on the official Access-tier page.

--kind: Type of storage account to use; see Types of Storage accounts for more information.

--tags: Optional, but it is a good habit to assign meaningful tags in key=value form for future reference, e.g. akscluster=prod, akscluster=dev, akscluster=uat.

$ az storage account list --resource-group mc_pvtest_pvaks_eastus -o table
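
Before referencing the account from a StorageClass, it can help to confirm that it finished provisioning. The helper below is only a sketch: the function name `storage_account_state` is my own, and it assumes you are already logged in with `az login` and have read access to the resource group.

```shell
# Print the provisioning state of a storage account (for example "Succeeded").
# Arguments: <account-name> <resource-group>
# "storage_account_state" is a hypothetical helper name, not an az command.
storage_account_state() {
  az storage account show \
    --name "$1" \
    --resource-group "$2" \
    --query provisioningState \
    --output tsv
}

# Usage:
# storage_account_state dynamicpv1 mc_pvtest_pvaks_eastus
```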

Step2: Use the created storage account reference as below.

Create the StorageClass with azure-file provisioner:

cat << EOF > dynamicSC.yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: file01dynamic
  labels:
    kubernetes.io/cluster-service: "true"
provisioner: kubernetes.io/azure-file
mountOptions:
  - dir_mode=0777
  - file_mode=0777
  - uid=0
  - gid=0
  - mfsymlinks
  - cache=strict
parameters:
  skuName: Standard_LRS
  storageAccount: dynamicpv1
  location: eastus
EOF

$ kubectl create -f dynamicSC.yaml

Check the output with the following command:


$ kubectl get sc

Step3: Create the PVC, then check the status of the PV that is created automatically to satisfy the PVC request.

cat << EOF > pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azurefile
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: file01dynamic
  resources:
    requests:
      storage: 5Gi
EOF

$ kubectl create -f pvc.yaml

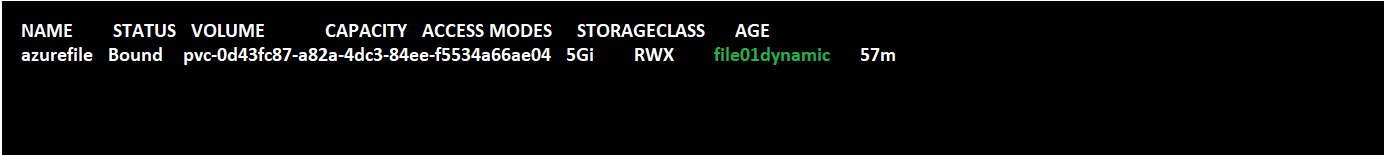

$ kubectl get pvc

$ kubectl get pv
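
Dynamic provisioning can take a little while, so the claim may show "Pending" for a few seconds before it turns "Bound". A minimal polling sketch, assuming `kubectl` points at the right cluster and namespace; the function name `wait_for_pvc_bound` and its defaults are my own choices:

```shell
# Poll a PVC until its phase is "Bound" or the attempts run out.
# Arguments: <pvc-name> [max-attempts, default 30] [seconds between attempts, default 2]
# "wait_for_pvc_bound" is a hypothetical helper name for this sketch.
wait_for_pvc_bound() {
  pvc="$1"
  attempts="${2:-30}"
  delay="${3:-2}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # .status.phase is Pending, Bound, or Lost
    phase=$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      echo "PVC $pvc is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "PVC $pvc is still '$phase' after $attempts attempts" >&2
  return 1
}

# Usage:
# wait_for_pvc_bound azurefile
```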

Step4: Now create the Deployment with 3 replicas as below.

# 'EOF' is quoted so the shell does not expand $number while writing the file.
cat << 'EOF' > nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-file
  labels:
    app: nginx-file
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-file
  template:
    metadata:
      labels:
        app: nginx-file
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        command: ["/bin/sh"]
        # Create the test files, then keep nginx in the foreground so the container stays running.
        args: ["-c", "for number in 1 2 3 4 5; do touch /mnt/pvfiletest/file$number; done; exec nginx -g 'daemon off;'"]
        ports:
        - containerPort: 80
        volumeMounts:
        - name: pvtest
          mountPath: /mnt/pvfiletest
      volumes:
      - name: pvtest
        persistentVolumeClaim:
          claimName: azurefile
EOF

$ kubectl create -f nginx-deployment.yaml

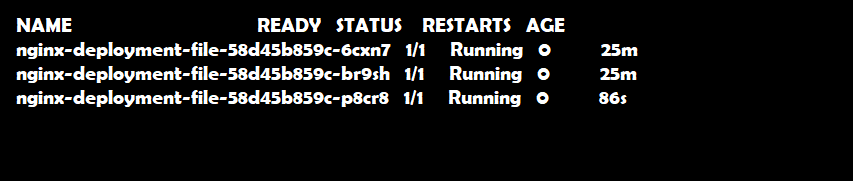

$ kubectl get pods


Step5: Check the Azure storage account for the created files. Also check each pod to confirm that the files are shared among them.
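
One way to run the per-pod check from the command line is to loop over the pods selected by the Deployment's label and list the mount in each. This is a sketch under the assumption that the pods are running and carry the `app=nginx-file` label from the manifest above; `list_shared_files` is a name I made up.

```shell
# List the mounted directory from every pod that carries the given label.
# Arguments: <label-selector> <mount-path>
# "list_shared_files" is a hypothetical helper name for this sketch.
list_shared_files() {
  selector="$1"
  mount_path="$2"
  # "-o name" prints one pod/<name> entry per line, which kubectl exec accepts.
  for pod in $(kubectl get pods -l "$selector" -o name); do
    echo "== $pod =="
    kubectl exec "$pod" -- ls "$mount_path"
  done
}

# Usage:
# list_shared_files app=nginx-file /mnt/pvfiletest
```

If the share really is mounted ReadWriteMany, every pod should print the same file list.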

Step6: Delete the pods and check the storage. We are using an external volume so that the data is preserved even after pods crash or are deleted.

Delete a pod manually; since the pods belong to a ReplicaSet, the ReplicaSet controller (part of the controller manager) recreates it for us.

$ kubectl get pods -w
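
The manual delete described in this step can also be scripted. The sketch below removes the first pod that matches a label and then waits for the Deployment to report all replicas ready again; `delete_one_pod_and_wait` and the 120s timeout are my own choices, not something from the original setup.

```shell
# Delete the first pod matching a label, then wait until the Deployment
# reports all replicas available again.
# Arguments: <label-selector> <deployment-name>
# "delete_one_pod_and_wait" is a hypothetical helper name for this sketch.
delete_one_pod_and_wait() {
  selector="$1"
  deployment="$2"
  pod=$(kubectl get pods -l "$selector" -o name | head -n 1)
  if [ -z "$pod" ]; then
    echo "no pod matches $selector" >&2
    return 1
  fi
  kubectl delete "$pod"
  kubectl rollout status "deployment/$deployment" --timeout=120s
}

# Usage:
# delete_one_pod_and_wait app=nginx-file nginx-deployment-file
```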

Step7: Exec into a pod and check the contents of the mount "/mnt/pvfiletest".

$ kubectl exec -it nginx-deployment-file-58d45b859c-p8cr8 -- ls /mnt/pvfiletest

Step8: Now let us delete the deployment and check whether the data is still available in our external storage.

$ kubectl delete deploy/nginx-deployment-file

$ kubectl get deploy

Finally, let us check the data persistence from the Azure Portal view.
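
The same check can be done from the CLI instead of the portal. This is a sketch with several assumptions: the PVC still exists, the PV is an in-tree `azureFile` volume (CSI-backed PVs store the share name elsewhere), and the CLI can authenticate to the storage account, for example through `--account-key` or the `AZURE_STORAGE_KEY` environment variable. The function name `list_share_files_for_pvc` is hypothetical.

```shell
# Look up the Azure file share behind a PVC and list its contents with the CLI.
# Arguments: <pvc-name> <storage-account-name>
# "list_share_files_for_pvc" is a hypothetical helper name for this sketch.
list_share_files_for_pvc() {
  pvc="$1"
  account="$2"
  # The name of the bound PV is recorded in the PVC spec.
  pv=$(kubectl get pvc "$pvc" -o jsonpath='{.spec.volumeName}')
  # Assumption: in-tree azureFile PV, which keeps the share name here.
  share=$(kubectl get pv "$pv" -o jsonpath='{.spec.azureFile.shareName}')
  az storage file list --account-name "$account" --share-name "$share" --output table
}

# Usage:
# list_share_files_for_pvc azurefile dynamicpv1
```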

Conclusion: A PersistentVolume can be created manually by a cluster administrator (static provisioning), or it can be created on demand by Kubernetes (dynamic provisioning). If a pod is scheduled and its claim requests storage that is not currently available, Kubernetes can create the underlying Azure Disk or Azure Files storage and attach it to the pod.

Dynamic provisioning uses a StorageClass object to identify what type of Azure storage needs to be created.

Choosing the type of storage depends on application and persistence scenarios.
